Build a winning scraper

Speed up your development with a runtime environment built to scrape, unlock, and scale web data collection

- 73+ ready-made JavaScript functions

- 38K+ scrapers built by our customers

- 195 countries with proxy endpoints

- 99.99% uptime for reliable scraping

Trusted by 20,000+ customers worldwide

Continuous scraping at your fingertips

Features

Scraping-ready functions

Choose from 70+ ready-to-use scraping code templates and customize them to match your specific use case.

Online dev environment

A fully hosted IDE that supports scalable CI/CD processes.

Embedded debugger

Review logs and connect Chrome DevTools to pinpoint the root cause of issues.

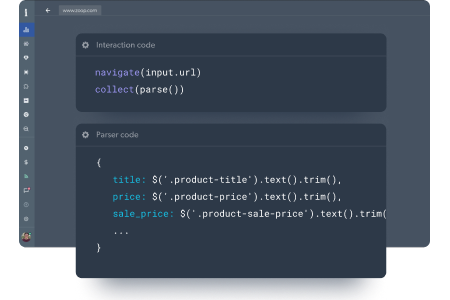

JavaScript browser interaction

Control browser actions with JavaScript.

Built-in parser

Write your parsers in cheerio and run live previews to see the data they produce.

Observability dashboard

Track, measure, and compare your scrapers and jobs in a single dashboard.

Auto-scaling infrastructure

Spend less on hardware and software maintenance by moving your compute processes to the cloud.

Proxy auto-pilot

Run your scrapers as a real user from any geolocation, with built-in fingerprinting, automated retries, CAPTCHA solving, and more.

Integration

Trigger scrapers on a schedule or via API, and connect them to numerous third-party service providers.

Develop more, maintain less

Reduce mean time to delivery by using ready-made JavaScript functions and an online IDE to build your web scrapers at scale.

Powered by an award-winning proxy network

Get past even the most complex anti-bot systems using an embedded AI-based web unlocker built on top of an extensive IP pool. Over 150M proxy IPs, best-in-class technology, and the ability to target any country, city, ZIP code, carrier, and ASN make our premium proxy services a top choice for developers.

Industry-Leading Compliance

Our privacy practices comply with data protection laws, including the EU data protection framework (GDPR) and the CCPA, and we honor requests to exercise privacy rights, among other measures.

Starting from $1 per 1,000 page loads

- Pay as you go plan available

- Volume discounts

- No setup or hidden fees

Data collection process

Discover the full list and hierarchy of URLs on a target website that match your needs. Use ready-made functions for site search and for clicking through category menus, such as:

- Data extraction from lazy-loading search results (load_more(), capture_graphql())

- Pagination functions for product discovery

- Support for pushing new pages to the queue for parallel scraping with rerun_stage() or next_stage()
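As a rough illustration of what the discovery stage does, the following standalone sketch walks category and pagination links breadth-first and collects de-duplicated product URLs. It is hypothetical: `discoverProductUrls` and the `fetchPage` callback (assumed to return `links`, `items`, and `next` for a URL) are illustrative names, not Bright Data functions, and an in-memory fake site stands in for real HTTP requests. In the IDE itself, newly found pages would be pushed to the queue with rerun_stage() or next_stage() rather than collected in a local list.

```javascript
// Hypothetical sketch: breadth-first URL discovery with pagination.
// `fetchPage(url)` is an assumed callback returning
// { links: [category/listing URLs], items: [product URLs], next: URL | null }.
function discoverProductUrls(startUrl, fetchPage, maxPages = 1000) {
  const queue = [startUrl];   // pages still to visit
  const visited = new Set();  // pages already visited (prevents loops)
  const products = new Set(); // de-duplicated product URLs, in discovery order

  while (queue.length > 0 && visited.size < maxPages) {
    const url = queue.shift();
    if (visited.has(url)) continue;
    visited.add(url);

    const page = fetchPage(url);
    for (const item of page.items) products.add(item);
    for (const link of page.links) {
      if (!visited.has(link)) queue.push(link);
    }
    // Pagination is treated as one more page to queue.
    if (page.next && !visited.has(page.next)) queue.push(page.next);
  }
  return [...products];
}

// Usage with an in-memory fake site instead of real requests.
const fakeSite = {
  "/": { links: ["/shoes", "/bags"], items: [], next: null },
  "/shoes": { links: [], items: ["/p/1", "/p/2"], next: "/shoes?page=2" },
  "/shoes?page=2": { links: [], items: ["/p/3"], next: null },
  "/bags": { links: ["/"], items: ["/p/4", "/p/1"], next: null },
};
const urls = discoverProductUrls("/", (u) => fakeSite[u]);
console.log(urls); // [ '/p/1', '/p/2', '/p/4', '/p/3' ]
```

The `maxPages` cap is a simple safeguard against runaway crawls on sites with unbounded pagination.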

Build a scraper for any page, using fixed URLs or dynamic URLs supplied through an API or passed straight from the discovery phase. Use the following functions to build a web scraper faster:

- HTML parsing (in cheerio)

- Capture browser network calls

- Prebuilt tools for GraphQL APIs

- Scrape the website's JSON APIs

Run tests to ensure you get the data you expect

- Define the schema of how you want to receive the data

- Custom validation code to verify that the data is in the right format

- Data can include JSON, media files, and browser screenshots
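To make the schema-plus-validation idea concrete, here is a minimal sketch in plain JavaScript. The schema format (a type, a `required` flag, and an optional `check` function per field) and the `validateRecord` helper are assumptions for illustration only; they are not the IDE's own schema syntax.

```javascript
// Hypothetical schema format: field name -> expected JavaScript type, whether the
// field is required, and an optional custom check. Not the IDE's own schema syntax.
const productSchema = {
  title: { type: "string", required: true },
  price: { type: "number", required: true, check: (v) => Number.isFinite(v) && v >= 0 },
  currency: { type: "string", required: true },
  image_url: { type: "string", required: false },
};

// Returns a list of problems for one scraped record; an empty list means it passed.
function validateRecord(record, schema) {
  const errors = [];
  for (const [field, rule] of Object.entries(schema)) {
    const value = record[field];
    if (value === undefined || value === null) {
      if (rule.required) errors.push(`${field}: missing`);
      continue; // optional fields may be absent
    }
    if (typeof value !== rule.type) {
      errors.push(`${field}: expected ${rule.type}, got ${typeof value}`);
    } else if (rule.check && !rule.check(value)) {
      errors.push(`${field}: failed custom check`);
    }
  }
  return errors;
}

console.log(validateRecord({ title: "Mug", price: 12.5, currency: "USD" }, productSchema));
// []
console.log(validateRecord({ title: "Mug", price: "12.5" }, productSchema));
// [ 'price: expected number, got string', 'currency: missing' ]
```

Returning a list of messages instead of throwing on the first problem makes it easier to report every issue in a record at once.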

Deliver the data to popular storage destinations:

- API

- Amazon S3

- Webhook

- Microsoft Azure

- Google Cloud Pub/Sub

- SFTP

Want to skip scraping and just get a dataset?

Designed for Any Use Case

Scrape eCommerce websites

- Configure dynamic pricing models

- Identify matching products in real-time

- Track changes in consumer demand

- Anticipate the next big product trends

- Get real-time alerts when new brands are introduced

Scrape Social Media

- Scrape likes, posts, comments, hashtags, & videos

- Discover influencers by number of followers, industry, and more

- Spot shifts in popularity by monitoring likes, shares, etc.

- Improve existing campaigns & create more effective ones

- Analyze product reviews and consumer feedback

Scrape promotional websites

- Scrape lead generation & job websites

- Scrape public profiles to update your CRM

- Identify key companies and employee movement

- Evaluate company growth and industry trends

- Analyze hiring patterns and in-demand skill sets

Scrape travel websites

- Compare prices of hotel & travel competitors

- Set dynamic pricing models in real-time

- Find your competitors' new deals & promotions

- Determine the right price for every travel promotion

- Anticipate the next big travel trends

Scrape real estate websites

- Compare property prices

- Keep an updated database of property listings

- Forecast sales and trends to improve ROI

- Analyze positive and negative rental market cycles

- Locate properties with the highest rental rates

FAQs

What are Serverless Functions?

Serverless Functions are a fully hosted cloud solution that lets developers build fast, scalable scrapers in a JavaScript coding environment. Built on Bright Data’s unblocking proxy solution, the IDE includes ready-made functions and code templates for major websites, which reduce development time and make scaling easy.

Who should use Serverless Functions?

Serverless Functions are ideal for customers with development capabilities (in-house or outsourced). Users get maximum control and flexibility without needing to maintain infrastructure or deal with proxies and anti-blocking systems, and they can scale easily and develop scrapers quickly using pre-built JavaScript functions and code templates.

What does the Serverless Functions trial include?

- unlimited tests

- access to existing code templates

- access to pre-built JavaScript functions

- publish 3 scrapers, up to 100 records each

The free trial is limited by the number of scraped records.

In what format is the data delivered?

Choose from JSON, NDJSON, CSV, or Microsoft Excel.
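For readers comparing the formats: NDJSON stores one JSON object per line, which suits streaming and appending, while CSV flattens records into columns. The sketch below shows both serializations in plain JavaScript to illustrate the formats; it is not how the platform generates its delivery files. `toNdjson`, `toCsv`, and `csvField` are hypothetical helper names, and the CSV quoting follows RFC 4180 (quote fields that contain commas, quotes, or line breaks; double embedded quotes; CRLF line endings).

```javascript
// NDJSON: one JSON-encoded record per line, each line ending in "\n".
function toNdjson(records) {
  return records.map((r) => JSON.stringify(r) + "\n").join("");
}

// Quote a CSV field only when needed, doubling any embedded quotes (RFC 4180 style).
function csvField(value) {
  if (value === undefined || value === null) return "";
  const s = String(value);
  return /[",\r\n]/.test(s) ? `"${s.replace(/"/g, '""')}"` : s;
}

// CSV: a header row from `columns`, then one row per record, with CRLF line endings.
function toCsv(records, columns) {
  const rows = [columns.map(csvField).join(",")];
  for (const r of records) rows.push(columns.map((c) => csvField(r[c])).join(","));
  return rows.map((row) => row + "\r\n").join("");
}

// Usage with two sample records (the second needs CSV quoting).
const records = [
  { title: "Mug", price: 12.5 },
  { title: 'Lamp, "XL"', price: 40 },
];
process.stdout.write(toNdjson(records));
process.stdout.write(toCsv(records, ["title", "price"]));
```

Note that nested objects survive NDJSON unchanged, whereas CSV needs an explicit column list and would require flattening for nested fields.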

Where is the data stored?

You can select your preferred delivery and storage method: API, Webhook, Amazon S3, Google Cloud, Google Cloud Pub/Sub, Microsoft Azure, or SFTP.

Why is it important to have an unblocking solution when web scraping?

Having an unblocking solution when scraping is important because many websites have anti-scraping measures that block the scraper’s IP address or require CAPTCHA solving. The unblocking solution implemented within Bright Data’s Web Scraper IDE is designed to bypass these obstacles and continue gathering data without interruption.
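One of the techniques an unblocking layer automates is retrying blocked requests. As a generic illustration only (not Bright Data's implementation), the sketch below retries an async request with exponential backoff and full jitter. `withRetries`, `backoffCeiling`, and the `fetchOnce` callback are hypothetical names, and the default delays (500 ms base, 30 s cap, 4 retries) are arbitrary assumptions.

```javascript
// Generic sketch: retry with exponential backoff and full jitter.
// `fetchOnce` is a placeholder async function that throws on a blocked or failed request.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Upper bound on the wait before retry number `attempt` (0-based), capped at capMs.
function backoffCeiling(attempt, baseMs = 500, capMs = 30000) {
  return Math.min(capMs, baseMs * 2 ** attempt);
}

async function withRetries(fetchOnce, { retries = 4, baseMs = 500, capMs = 30000 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await fetchOnce(attempt);
    } catch (err) {
      lastError = err;
      if (attempt === retries) break; // out of retries: give up
      // Full jitter: wait a random time in [0, ceiling) so retries do not line up.
      await sleep(Math.random() * backoffCeiling(attempt, baseMs, capMs));
    }
  }
  throw lastError;
}

// Usage: a fake request that is "blocked" twice before succeeding.
let calls = 0;
const flaky = async () => {
  calls += 1;
  if (calls < 3) throw new Error("HTTP 403 blocked");
  return "<html>ok</html>";
};
withRetries(flaky, { baseMs: 10 }).then((body) => console.log(body, "after", calls, "attempts"));
```

Retrying alone does not get past fingerprinting or CAPTCHAs; it only smooths over transient blocks, which is why it is typically combined with IP rotation and the other measures described above.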

What kind of data can I scrape?

Publicly available data. Due to our commitment to privacy laws, we do not allow scraping behind logins.